ManipGen Enables Robots To Manipulate New Objects in Diverse Environments

Clearing the dinner table is a task easy enough for a child to master, but it's a major challenge for robots.

Robots are great at doing repetitive tasks but struggle when they must do something new or interact with the disorder and mess of the real world. Such tasks become especially challenging when they have many steps.

"You don't want to reprogram the robot for every new task," said Murtaza Dalal, a Ph.D. student in the School of Computer Science's (SCS) Robotics Institute. "You want to just tell the robot what to do, and it does it. That's necessary if we want robots to be useful in our daily lives."

To enable robots to undertake a wide variety of tasks they haven't previously encountered, Dalal and other researchers at SCS and Apple Inc. have developed an approach to robotic manipulation called ManipGen that has proven highly successful for these multistep tasks, known as long-horizon tasks.

The key idea, Dalal explained, is to divide the task of planning how a robotic arm needs to move into two parts. Imagine opening a door: The first step is to reach the door handle; the next is to turn it. To solve the first problem, the researchers use well-established data-driven methods for computer vision and motion planning to locate the object and move the robotic arm's manipulator near it. This simplifies the second part of the process, limiting it to interacting with the nearby object, in this case the door handle.
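
The split described above can be illustrated with a small Python sketch. It is not ManipGen's code: the `Point` type, the `approach_point` and `local_offset` helpers, the 10 cm standoff, and the handle coordinates are all assumptions made up for illustration. The sketch only shows that once the arm has been brought to a fixed offset from the object, what the second stage has to deal with looks the same wherever the object sits in the room.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Point:
    x: float
    y: float
    z: float


def approach_point(obj: Point, standoff: float = 0.10) -> Point:
    """Stage 1 target (assumed): a point a fixed standoff directly above the object."""
    return Point(obj.x, obj.y, obj.z + standoff)


def local_offset(gripper: Point, obj: Point) -> Point:
    """Stage 2 input (assumed): the object's position relative to the gripper."""
    return Point(obj.x - gripper.x, obj.y - gripper.y, obj.z - gripper.z)


# Two hypothetical scenes with a door handle at very different absolute positions.
handle_a = Point(0.40, -0.20, 0.75)
handle_b = Point(1.10, 0.35, 0.30)

# After stage 1 has moved the gripper to the approach point, compute what
# stage 2 would see in each scene.
offset_a = local_offset(approach_point(handle_a), handle_a)
offset_b = local_offset(approach_point(handle_b), handle_b)

print(offset_a)
print(offset_b)
```

Both offsets come out as roughly (0, 0, -0.10), up to floating-point rounding, so a skill driven by this relative view would not need to know the handle's absolute position.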

"At that point, the robot no longer cares where the object is. The robot only cares about how to grasp it," Dalal said.

Robots are typically trained to perform a task by using massive amounts of data derived from demonstrations of the task. That data can be manually collected, with humans controlling the robot, but the process is expensive and time-consuming. An alternative method is to use simulation to rapidly generate data. In this case, the simulation would place the robot in a variety of virtual scenes, enabling it to learn how to grasp objects of various shapes and sizes, or to open and shut drawers or doors.

Dalal said the research team used this simulation method to generate data and train neural networks to learn how to pick up and place thousands of objects and open and close thousands of drawers and doors, employing trial-and-error reinforcement learning techniques. The team developed specific training and hardware solutions for transferring these networks trained in simulation to the real world. They found that these skills could be recombined as necessary to enable the robot to interact with many different objects in the real world, including those it hadn't previously encountered.

"We don't need to collect any new data," Dalal said of deploying the robot in the real world. "We just tell the robot what to do in English and it does it."

The team implements the two-part process by using foundation models such as GPT-4o that can look at the robot's environment and decompose the task, such as cleaning up the table, into a sequence of skills for the robot to execute. Then the robot executes those skills, first estimating positions near objects using computer vision, then going there using motion planning, and finally manipulating the object using a depth camera to measure distances.
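
The flow of that process can be sketched in a few lines of Python. This is a toy outline under stated assumptions, not the team's implementation: `plan_task` stands in for the foundation model with a hard-coded plan, `estimate_pose_near` stands in for computer vision, `move_to` stands in for motion planning, and the entries of `LOCAL_POLICIES` stand in for the skills trained in simulation. Every name, the scene coordinates, and the 10 cm offset are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Pose = Tuple[float, float, float]


@dataclass(frozen=True)
class SkillCall:
    skill: str   # one of "pick", "place", "open", "close"
    target: str  # name of the object the skill acts on


def plan_task(instruction: str) -> List[SkillCall]:
    """Stand-in for the foundation-model planner (hard-coded, hypothetical)."""
    plans = {
        "put the spoon in the drawer": [
            SkillCall("open", "drawer"),
            SkillCall("pick", "spoon"),
            SkillCall("place", "drawer"),
            SkillCall("close", "drawer"),
        ]
    }
    return plans[instruction.lower()]


def estimate_pose_near(target: str, scene: Dict[str, Pose]) -> Pose:
    """Stand-in for vision: a point 10 cm above the named object."""
    x, y, z = scene[target]
    return (x, y, z + 0.10)


def move_to(goal: Pose, target: str) -> str:
    """Stand-in for motion planning: only reports the requested move."""
    return f"move near {target} at ({goal[0]:.2f}, {goal[1]:.2f}, {goal[2]:.2f})"


# Stand-ins for the learned skills: each one just reports what it would do.
LOCAL_POLICIES: Dict[str, Callable[[str], str]] = {
    name: (lambda target, name=name: f"{name} {target}")
    for name in ("pick", "place", "open", "close")
}


def run_task(instruction: str, scene: Dict[str, Pose]) -> List[str]:
    """Plan once, then for each skill: locate, move nearby, run the skill."""
    log: List[str] = []
    for call in plan_task(instruction):
        goal = estimate_pose_near(call.target, scene)
        log.append(move_to(goal, call.target))
        log.append(LOCAL_POLICIES[call.skill](call.target))
    return log


SCENE: Dict[str, Pose] = {"drawer": (0.60, 0.00, 0.40), "spoon": (0.30, 0.20, 0.75)}

for step in run_task("Put the spoon in the drawer", SCENE):
    print(step)
```

The design point the sketch tries to capture is that the planner only chooses which skill to apply to which object, while each skill starts from a pose already close to its target.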

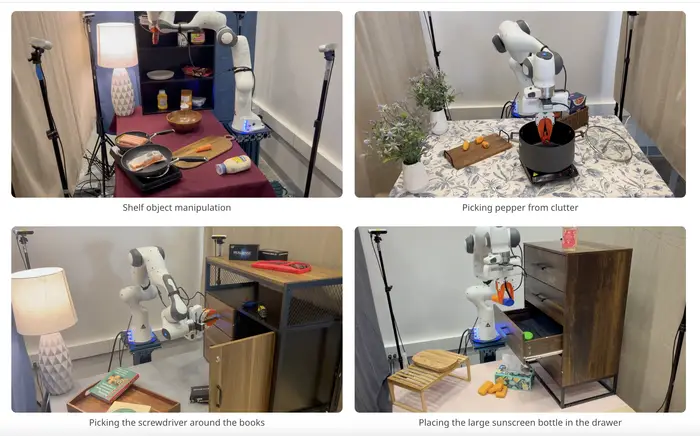

The researchers have applied their method to challenging multistage tasks such as opening drawers and placing objects in them or rearranging objects on a shelf. They have demonstrated that this approach works with robotics tasks that involve up to eight steps, "but I think we could go even further," Dalal said.

Likewise, gathering data through demonstrations could enable this approach to be extended to objects that can't currently be simulated, such as soft and flexible objects.

"There's so much more to explore with ManipGen. The foundation we've built through this project opens up exciting possibilities for future advancements in robotic manipulation and brings us closer to the goal of developing generalist robots," said Min Liu, a master's student in the Machine Learning Department and co-lead on the project.

Dalal and Liu outline ManipGen in a newly released research paper they co-authored. The research team also included Deepak Pathak, the Raj Reddy Assistant Professor of Computer Science in the Robotics Institute; Ruslan Salakhutdinov, the UPMC Professor of Computer Science in the Machine Learning Department; and Walter Talbott, Chen Chen and Jian Zhang, research scientists at Apple Inc.

"ManipGen really demonstrates the strength of simulation-to-reality transfer as a paradigm for producing robots that can generalize broadly, something we have seen in locomotion, but until now, not for general manipulation," Pathak said.

Salakhutdinov, the principal investigator on the project, said ManipGen builds on research to enable robots to solve longer and more complicated tasks. "In this iteration," he said, "we finally show the exciting culmination of years of work: an agent that can generalize and solve an enormous array of tasks in the real world."

For more details, including the research paper and real-world deployment of ManipGen, visit the research website.