CMU, Meta Seek To Make Computer-based Tasks Accessible with Wristband Technology

As part of a larger commitment to developing equitable technology, Carnegie Mellon University and Meta announce a collaborative project to make computer-based tasks accessible to more people. This project focuses on using wearable sensing technology to enable people with different motor abilities to perform everyday tasks and enjoy gaming in digital and mixed reality environments.

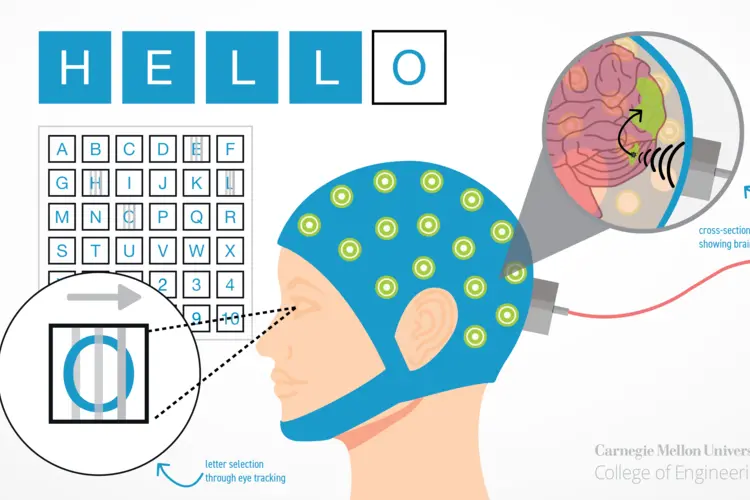

Meta’s research in electromyography uses sensors placed on the skin to measure the electrical signals the user generates through muscles in their wrist, which are translated into input signals for various devices. While Meta has already demonstrated that this technology could replace keyboards and joysticks, the team continues to invest in and support projects to confirm that it can be used by a wide range of people.

Douglas Weber, a professor in the Department of Mechanical Engineering and the Neuroscience Institute at Carnegie Mellon University, has previously shown that people with complete hand paralysis retain the ability to control muscles in their forearm, even muscles that are too weak to produce movement. His team found that some individuals with spinal cord injury still exhibit unique muscle activity patterns when attempting to move specific fingers, which could be used for human-computer interaction.

“This research evaluates bypassing physical motion and relying instead on muscle signals. If successful, this approach could make computers and other digital devices more accessible for people with physical disabilities,” said Weber.

Working with Meta, Weber’s team seeks to build upon their initial results to assess whether and to what extent people with spinal cord injury can interact with digital devices, such as computers and mixed reality systems, by using Meta’s surface electromyography (sEMG) research prototype and related software.

The project centers on interactive computing tasks. In the study, which was approved by the Institutional Review Board, participants begin by performing a series of adaptive mini games. Once their proficiency is benchmarked, the CMU team creates new games and other activities in mixed reality that are tailored to the abilities and interests of the participant.

“In the digital world, people with full or limited physical ability can be empowered to act virtually, using signals from their motor system,” explained Dailyn Despradel Rumaldo, a Ph.D. candidate at Carnegie Mellon University. “In the case of mixed reality technology, we are creating simulated environments where users interact with objects and other users, regardless of motor abilities.”

The project is part of an ongoing research investment by Meta to support the development of equitable and accessible interfaces to help people do more, together.