SCS-Created Tool Uses an LLM To Train Mental Health Professionals

Therapists in training must learn how to identify markers of certain mental illnesses during patient sessions. Role-playing scenarios are critical for learning this skill, but limited resources and time mean that the students' peers often stand in as patients, which can lead to problems when trainees transition to real-world sessions.

Researchers in Carnegie Mellon University's School of Computer Science (SCS) developed Patient-Psi, a simulated patient for cognitive behavioral therapy (CBT) training that could help bridge the gap between classroom learning and real-world experience.

The idea for Patient-Psi came from two of Zhiyu Zoey Chen's interests: CBT and large language models (LLMs). Chen, an assistant professor at the University of Texas at Dallas, said she sometimes played around with LLMs, like ChatGPT, in her downtime. She would ask one to pretend to be a character and was delighted by how good it was at imitation and role-playing. While she was a postdoctoral researcher in SCS' Software and Societal Systems Department, Chen started learning about CBT and how therapists use cognitive modeling to identify and profile patients' mental illnesses in a structured way.

"When I learned about the cognitive model in CBT, I came up with this idea of combining it with the strong language ability of LLMs," she said. "I want to explore how, perhaps, these LLMs could resemble real patients."

In an initial study, the research team spoke with CBT mental health professionals, who said their training — particularly role-playing activities — didn't adequately prepare them for how unpredictable and dynamic patients were in real-world sessions. One core skill mental health practitioners who use CBT need to develop is formulating a patient's cognitive model, a structured way to understand how someone's thoughts and beliefs impact their emotions and behaviors. For example, being stood up for a date might make someone feel unlovable or undesirable, which might make them self-isolate.
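For readers who think in code, the date example can be written down as a small record. This is only an illustrative sketch: the `CognitiveModel` class and its three fields are assumptions made for this example, not the schema Patient-Psi actually uses, and a clinical cognitive model has more components than shown here.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class CognitiveModel:
    """Hypothetical, simplified record of a patient's cognitive model."""

    situation: str               # the triggering event
    beliefs: Tuple[str, ...]     # what the person comes to believe about themselves
    behaviors: Tuple[str, ...]   # how those beliefs show up in behavior

    def describe(self) -> str:
        """Return a one-line, human-readable summary of the model."""
        return (
            f"Situation: {self.situation}. "
            f"Beliefs: {', '.join(self.beliefs)}. "
            f"Behaviors: {', '.join(self.behaviors)}."
        )


# The example from the article, encoded with the assumed fields.
stood_up = CognitiveModel(
    situation="being stood up for a date",
    beliefs=("unlovable", "undesirable"),
    behaviors=("self-isolation",),
)
print(stood_up.describe())
```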

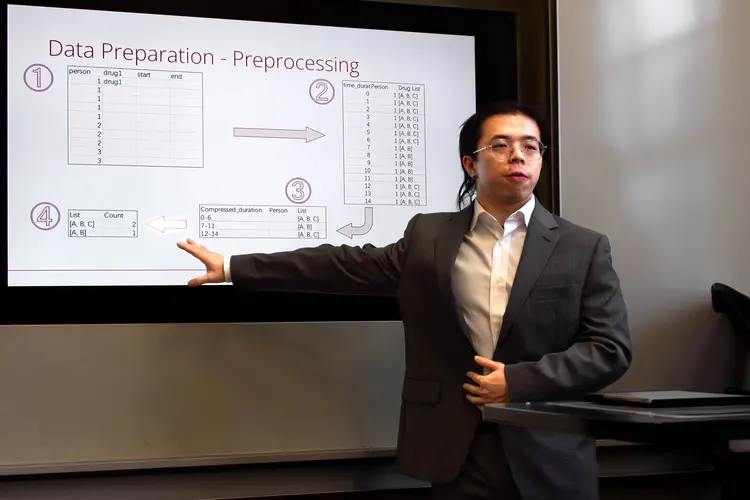

To build Patient-Psi, researchers worked with two clinical psychologists to create the tool's cognitive models. The researchers used GPT-4 Turbo to summarize therapy session transcripts, and the psychologists drew on those summaries to write the models. In total, they created 106 cognitive models for Patient-Psi.

Once the cognitive models were developed, the next challenge was enabling the LLMs to speak like a patient, said Ruiyi Wang, a doctoral student at the University of California San Diego who earned her master's degree in intelligent information systems in SCS' Language Technologies Institute.

"Large language models, like GPT-4, have good conversational abilities," Wang said. "They are not only capable of answering knowledge-based questions but can also engage in natural conversation if provided with correct instructions and careful supervision. We leveraged the conversational ability of LLMs to role-play and simulate patients with mental health conditions by incorporating the cognitive models into the conversational pipeline of Patient-Psi."

When training, users could choose from Patient-Psi's six conversation styles, such as verbose or upset, and Patient-Psi would select a cognitive model compatible with that style to initialize the conversational agent. The trainee would see the simulated patient's history, and a session would begin. During the session, the trainee used their CBT skills to identify and reconstruct the cognitive model assigned to the simulated patient at the session's start. Once the session ended, trainees received immediate feedback on how closely their reconstructed cognitive model matched the one used to program Patient-Psi.
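The session flow described above (pick a style, draw a compatible cognitive model, then compare the trainee's reconstruction with the original) could be sketched roughly as follows. Everything here is hypothetical: the `SimulatedPatient` record, the `select_patient` and `compare_models` helpers, the component names and the second patient are invented for illustration, and the article does not say how Patient-Psi scores a match. The set-overlap score below is simply one plausible stand-in, and the LLM conversation itself is left out.

```python
import random
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Optional

# A cognitive model here is just named components mapped to sets of labels (assumed shape).
Model = Dict[str, FrozenSet[str]]


@dataclass
class SimulatedPatient:
    history: str             # shown to the trainee before the session
    styles: FrozenSet[str]   # conversation styles this model is compatible with
    model: Model             # reference cognitive model the trainee must reconstruct


def select_patient(patients: List[SimulatedPatient], style: str,
                   rng: Optional[random.Random] = None) -> SimulatedPatient:
    """Pick a random patient whose cognitive model is compatible with the chosen style."""
    rng = rng or random.Random()
    candidates = [p for p in patients if style in p.styles]
    if not candidates:
        raise ValueError(f"no cognitive model is compatible with style {style!r}")
    return rng.choice(candidates)


def compare_models(reference: Model, attempt: Model) -> Dict[str, float]:
    """Score each component by set overlap (Jaccard index), from 0.0 to 1.0."""
    scores = {}
    for component, expected in reference.items():
        given = attempt.get(component, frozenset())
        union = expected | given
        scores[component] = len(expected & given) / len(union) if union else 1.0
    return scores


# Made-up patients; only "verbose" and "upset" are style names taken from the article.
PATIENTS = [
    SimulatedPatient(
        history="Was stood up for a date and has avoided friends since.",
        styles=frozenset({"upset"}),
        model={"core_beliefs": frozenset({"unlovable", "undesirable"}),
               "behaviors": frozenset({"self-isolation"})},
    ),
    SimulatedPatient(
        history="Was passed over for a promotion and talks at length about work.",
        styles=frozenset({"verbose"}),
        model={"core_beliefs": frozenset({"incompetent"}),
               "behaviors": frozenset({"avoids new projects"})},
    ),
]

patient = select_patient(PATIENTS, "upset", random.Random(0))
trainee_attempt = {"core_beliefs": frozenset({"unlovable"}),
                   "behaviors": frozenset({"self-isolation"})}
feedback = compare_models(patient.model, trainee_attempt)
print(feedback)
```

In this toy run the trainee identifies the behavior and one of the two core beliefs, so the feedback shows a full match on behaviors and a partial match on core beliefs.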

Stephanie Milani, a doctoral student in the Machine Learning Department, said evaluating Patient-Psi was an interesting challenge because the research was in a novel and specialized area. Because the work was also human-focused, there were no existing metrics to compare it against.

"There's a lot of thought that needs to be put into how to define your metrics and ensure that you're measuring the right things," Milani said. "We needed expert evaluations to understand where we were doing well and where we might need improvement. I think this type of evaluation trend could become more common as we get into these more niche or specific use cases of LLMs where there aren't existing evaluation benchmarks or metrics."

Patient-Psi hasn't been deployed for mental health practitioners in real-world training yet, but Milani and Chen plan to continue developing LLMs that function in the therapeutic space.