AI Expands Potential for Discovery in Physics

Carnegie Mellon physicists use AI to analyze large datasets from experiments, predict complex physical phenomena and optimize simulations


The long-standing interplay between artificial intelligence and physics was at the heart of the 2024 Nobel Prize in Physics, which went to two AI trailblazers.

“AI for physics and physics for AI are concepts you hear,” said Matteo Cremonesi, an assistant professor of physics at Carnegie Mellon University. “The fact that the Nobel Prize went to AI pioneers is not surprising. It’s a recognition of something that has been going on for some time.”

The Royal Swedish Academy of Sciences awarded the 2024 Nobel Prize in Physics to John J. Hopfield of Princeton University and Geoffrey E. Hinton of the University of Toronto in recognition of their foundational work in machine learning with artificial neural networks. Hinton served on the faculty of Carnegie Mellon’s Computer Science Department from 1982 to 1987.

“There’s been a lot of work in recent years in how we can use neural networks for scientific discovery in physics,” said Rachel Mandelbaum, interim head of Carnegie Mellon’s Department of Physics. “This is an important recognition of some of the precursor studies that set us along this pathway, and it’s an area where Carnegie Mellon is very active.”

Carnegie Mellon’s Department of Physics has strong research groups in astrophysics and cosmology, particle physics, biophysics, computational physics and theoretical physics, whose members play key roles in ongoing experiments in these areas.

Astronomical datasets

Mandelbaum leads research with the University of Washington to develop software to analyze large datasets generated by the Legacy Survey of Space and Time (LSST), which will be carried out by the Vera C. Rubin Observatory in northern Chile.

“In astrophysics, if we go back, say 20 years, the datasets were pretty small,” Mandelbaum said. “And one of the main areas for innovation was computational techniques for creating simulations of the universe or galaxies more efficiently. Today we have larger and larger datasets. Those new problems need new tools. It’s very much the case that we have problems that help the field of machine learning evolve.”

Through the LSST, the Rubin Observatory, a joint initiative of the National Science Foundation and the Department of Energy, will collect and process more than 20 terabytes of data each night — up to 10 petabytes each year for 10 years — and will build detailed composite images of the southern sky, including information about changes over time.

“Many of the LSST’s science objectives share common traits and computational challenges,” she said. “If we develop our algorithms and analysis frameworks with forethought, we can use them to enable many of the survey’s core science objectives.”

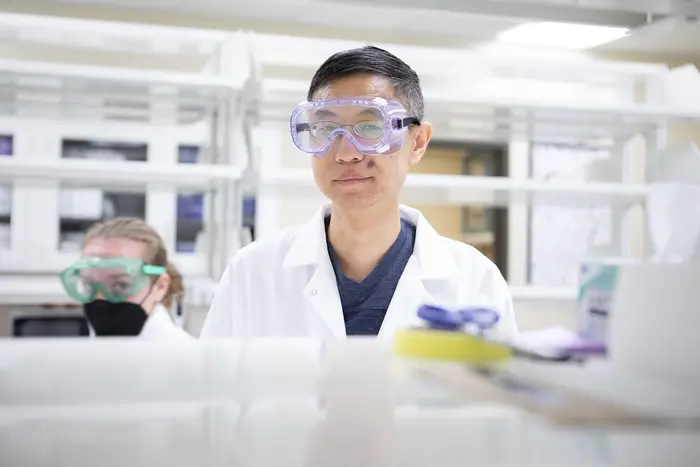

Biological laws

Fangwei Si, the Cooper-Siegel Assistant Professor of Physics, aims to understand living systems in a quantitatively precise way. With a background in physics, mechanical engineering and biology, he applies physical concepts and tools to study bacterial cells.

“We look at how individual bacterial cells respond to environmental changes, but we need statistics and to track their life for a very long time,” Si said. “We track thousands of bacterial cells in environments and need to analyze how the health of each cell develops over time.”

Si gathers information on food intake, size, shape, density, reproduction and growth rates, and studies how those and other factors relate to the activity of subcellular components such as proteins, RNA and DNA.

“We have those parameters for tens of thousands or even more cells,” Si said. “In the 1960s microscopy was just developed enough to capture pictures of single bacterial cells. People measured those quantities by hand with rulers on films. It used to be that Ph.D. students could spend years on one experiment. But now with machine learning-based analysis, we can finish the same work within one day.”

Machine learning helps Si and his researchers analyze terabytes of data more quickly. The lab develops and adapts tools, takes rigorous measurements and defines new concepts. Using trained machine learning models, Si and his colleagues can automatically detect where cells are in images and analyze them.

“One of our ongoing projects is to use the cell images captured and combine them with machine learning to understand the physiological state of the cell,” Si said. “We hope to extend this work to other organisms, for example human cells, so that in the future we can tell whether the cells are ready to enter different states, like turning into a cancer cell.”

Need for speed

Giant particle accelerators like CERN’s Large Hadron Collider smash protons together at nearly the speed of light. The Compact Muon Solenoid (CMS) detector captures 3D images of these collisions at a rate of one every 25 nanoseconds, or 40 million images a second. This corresponds to a data rate of 10 terabytes per second.
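The figures above fit together as follows. This is a worked check using only the article’s own numbers and decimal units (1 TB = 10^12 bytes); the per-image size is an average implied by those numbers, not a quoted CMS specification.

```latex
\begin{aligned}
f &= \frac{1}{25\,\text{ns}} = \frac{1}{25\times10^{-9}\,\text{s}} = 4\times10^{7}\ \text{images per second},\\[4pt]
\text{data per image} &\approx \frac{10\ \text{TB/s}}{4\times10^{7}\ \text{s}^{-1}}
  = \frac{10^{13}\ \text{B}}{4\times10^{7}} = 2.5\times10^{5}\ \text{B} \approx 250\ \text{kB}.
\end{aligned}
```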

“We don’t have the capability to save all of the information delivered by the collider, so we need an online mechanism to decide if images are interesting or not,” Cremonesi said.

Cremonesi is part of a team building software that will analyze these images in real time, contributing to the CMS “trigger.” The Next-Generation Triggers project at CERN aims to develop a way to filter the flow of data through a high-performance event-selection system. The trigger is a hardware data-filtering system that rapidly decides whether an image is interesting enough to save for offline analysis. Of the 40 million images taken each second, on average 1,200 are selected by the trigger, or about 0.003%. A team of CMU students and researchers led by Cremonesi is studying how AI could improve the accuracy of this crucial decision-making step.
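To make the idea of an online keep-or-discard decision concrete, here is a toy Python sketch. It is not the CMS trigger, which runs on dedicated hardware under far tighter time limits. The `Event` type, its `score` field (standing in for some model’s “interestingness” output), the `trigger` function and the `threshold` value are all hypothetical. Only the 40 million and 1,200 rates come from the article.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator

# Rates quoted in the article.
COLLISION_RATE_HZ = 40_000_000  # one image every 25 ns
ACCEPTED_PER_SECOND = 1_200     # images kept by the trigger, on average


@dataclass
class Event:
    """Hypothetical stand-in for one detector image."""
    event_id: int
    score: float  # hypothetical "interestingness" score, e.g. from a trained model


def trigger(events: Iterable[Event], threshold: float) -> Iterator[Event]:
    """Yield only events whose score reaches the threshold.

    Each event is judged once, as it arrives, and rejected events are simply
    dropped. This mirrors why a trigger must decide online: the full stream
    is too large to store and sort through later.
    """
    for event in events:
        if event.score >= threshold:
            yield event


def acceptance_fraction(accepted: int, total: int) -> float:
    """Fraction of incoming events that the filter keeps."""
    return accepted / total


if __name__ == "__main__":
    frac = acceptance_fraction(ACCEPTED_PER_SECOND, COLLISION_RATE_HZ)
    print(f"kept fraction: {frac:.6%}")

    # Toy stream: 10,000 events with scores cycling from 0.000 to 0.999.
    stream = (Event(i, score=(i % 1000) / 1000) for i in range(10_000))
    kept = list(trigger(stream, threshold=0.9985))
    print(f"kept {len(kept)} of 10,000 toy events")
```

The generator-based filter never holds the whole stream in memory, which is the property that matters here. A real trigger would replace the fixed threshold with physics-motivated selections or, as in the work described above, a fast AI model.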

“This is very challenging real-time processing that we do. It’s one of the most challenging real-time AI applications on Earth,” Cremonesi said. To put this in perspective, self-driving cars that use machine learning algorithms operate on timescales of microseconds, and a microsecond is 1,000 times longer than a nanosecond.

Cremonesi started working with the CMS experiment in 2015 while he was a postdoctoral researcher at Fermi National Accelerator Laboratory near Chicago. He specialized in computing operations, software development and applied AI.

“Typically researchers in my field develop technical expertise in hardware, but I was always more fascinated by the software aspect of physics,” said Cremonesi, who joined CMU in 2020.