Meet the Trojan-Hunting SEI Researchers Improving Computer Vision


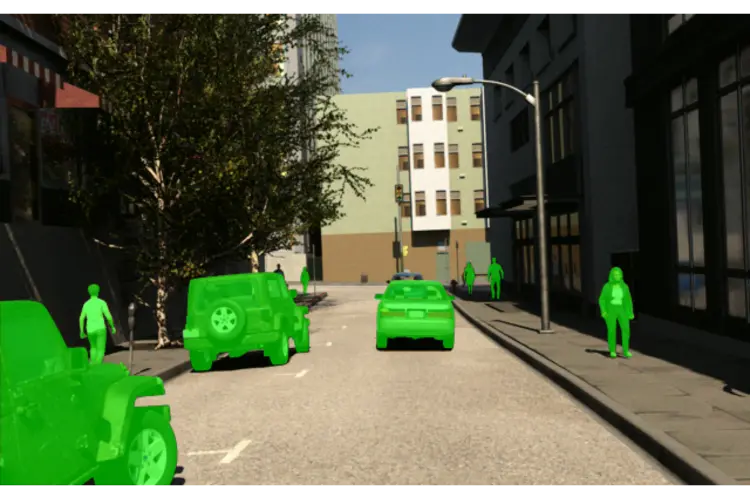

People use computer vision every day — to unlock a smartphone, receive notifications when a package has arrived on their doorstep, or check the speed limit on the dashboard of their car. But computers do not see the same way humans do — they process information like edges, colors or patterns and interpret what the image is. When the data they use to “see” something is corrupted, computer-driven vehicles can make consequential errors, like reading a stop sign as a speed limit sign.

Researchers from Carnegie Mellon University’s Software Engineering Institute recently won second place in an IEEE CNN interpretability competition for a new method that detects trojans, a type of malware intentionally inserted in data to mislead users, and makes them visible to the human eye.

In the following Q&A, SEI’s David Shriver, a machine learning research scientist, and Hayden Moore, a software developer, explain why finding trojans is important and what’s next for the tool they developed.

Let’s start at the beginning. What is computer vision?

Moore: Computer vision is a way to have a digital system interpret digital content — images and videos — and provide realistic descriptions of what the images are and what the videos are showing.

Can you give us some everyday examples?

Shriver: If you have a security system that alerts you when someone walks in front of the camera, that uses computer vision. The United States Postal Service uses computer vision — they have systems that can read your package and tell it where to go. Zoom uses computer vision to blur your background. There are a lot of places where people interact with computer vision and don't realize it. Most of it's pretty benign stuff, but applications are coming up, like autonomous vehicles, where the consequences of failure are pretty significant.

If you’re trying to prevent those failures, where do you start?

Shriver: We created a method called Feature Embeddings Using Diffusion (FEUD). It uses generative artificial intelligence and other techniques to find disruptive images, ones that intentionally cause an AI to produce a different response, and recreate them for human analysts.

What does FEUD do?

Shriver: The primary goal here is to try to identify patches, or little sections of images, that cause the models to misbehave. If someone puts a sticker on a stop sign, for instance, it can cause some misbehavior to occur. And so we want to be able to find these patches, these little sections of the images that cause misbehavior, and either not use the model or try to fix it.

Moore: FEUD’s real magic is how well it transforms the visual data in the discovered trojans into a visual image that a human analyst would recognize. We were even recognized by the competition as the team that produced images with the most realistic and human-interpretable features.

What do you mean by “model”?

Shriver: An AI model is a program that uses patterns automatically extracted from a large set of examples to accomplish a task. For example, a computer vision model that is trained to recognize cats could be trained on a large set of images labeled as either having or not having a cat. From the set of examples, the model would automatically learn the patterns in images that most likely represent a cat, which can then be used to detect cats in future images.

Moore: The learned patterns and features are represented as a high-dimensional matrix of weights, known as the trained model. This trained model will then hopefully be able to make accurate decisions, classifications and predictions on a similar task or challenge in the future.
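As a rough illustration of what "learning weights from labeled examples" means, here is a minimal sketch in Python. It is not the researchers' code and is far simpler than a real computer vision model: each "image" is reduced to two made-up numeric features, and the function names (`train_linear_classifier`, `predict`) and the toy data are assumptions chosen for the example.

```python
import numpy as np

def train_linear_classifier(features, labels, steps=500, lr=0.5):
    """Fit logistic-regression weights to labeled examples by gradient descent."""
    n_samples, n_features = features.shape
    weights = np.zeros(n_features)
    bias = 0.0
    for _ in range(steps):
        logits = features @ weights + bias
        probs = 1.0 / (1.0 + np.exp(-logits))   # predicted probability of "cat"
        error = probs - labels                   # gradient of the log loss w.r.t. logits
        weights -= lr * (features.T @ error) / n_samples
        bias -= lr * error.mean()
    return weights, bias

def predict(features, weights, bias):
    """Return 1 ("cat") where the learned score is positive, else 0."""
    return (features @ weights + bias > 0).astype(int)

rng = np.random.default_rng(0)

# Hypothetical toy data: two features per "image", with cat and non-cat
# examples drawn from two well-separated clusters.
cats = rng.normal(loc=2.0, scale=0.5, size=(100, 2))
not_cats = rng.normal(loc=-2.0, scale=0.5, size=(100, 2))
features = np.vstack([cats, not_cats])
labels = np.concatenate([np.ones(100), np.zeros(100)])

# The "trained model" is just the learned numbers in `weights` and `bias`.
weights, bias = train_linear_classifier(features, labels)
accuracy = (predict(features, weights, bias) == labels).mean()
print("learned weights:", weights, "bias:", bias)
print("training accuracy:", accuracy)
```

A real vision model has millions of weights rather than two, but the principle is the same: the weights are whatever the training data pushes them to be, which is why the origin of that data matters.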

Shriver: A lot of datasets are just scraped from online sources, which makes it pretty easy for attackers to put data online and hope you grab it. The accessibility of this data makes training and deployment of AI models much easier, but the background and problems lurking in these datasets and trained models are not always known. We want to ensure that when we use one, it’s safe and there are no trojans inserted there.

What is a trojan?

Shriver: A trojan is a type of vulnerability or lack of safety that someone intentionally inserted into a model. In the real world, they could look like something you might see in the everyday world, like a leaf or a goldfish sticker, something that doesn’t look harmful.

How could someone use a trojan to get past a security system?

Shriver: Someone could do poisoning where if they're wearing a certain type of clothing, or they're holding a sign in front of them, it causes detection to not occur. You can essentially hide objects that way. They could have a certain type of hat on, or a certain type of clothing, and evade detection.

So, if someone were robbing a bank they could wear a certain type of clothing and trick the security camera?

Shriver: It would trick the computer vision system. It wouldn't necessarily make the person not appear in the videos. A human would be able to see the bank robber, but it tricks the AI system. These patches don't necessarily affect the actual sensor. They're affecting the model.
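The poisoning scenario described above can be illustrated with a toy sketch. This is not FEUD and not a real detection system: the 16-pixel "images", the bright-top-row "person" signal, the single-pixel trigger, and all function names are assumptions made up for the example. It only shows how a few mislabeled training examples carrying a patch can teach a model to ignore a person whenever the patch is present, while the model still behaves normally on clean images.

```python
import numpy as np

rng = np.random.default_rng(0)
N_PIXELS = 16        # hypothetical 4x4 grayscale image, flattened
TRIGGER_PIXEL = 15   # hypothetical location of the attacker's patch

def make_images(n, has_person):
    """Generate noisy toy images; a 'person' is a bright top row (pixels 0-3)."""
    images = rng.normal(0.0, 0.1, size=(n, N_PIXELS))
    if has_person:
        images[:, :4] += 1.0
    return images

def add_trigger(images):
    """Stamp the attacker's patch onto a copy of the images."""
    patched = images.copy()
    patched[:, TRIGGER_PIXEL] = 3.0
    return patched

def train(features, labels, steps=2000, lr=0.5):
    """Fit a logistic-regression detector by gradient descent."""
    weights = np.zeros(features.shape[1])
    bias = 0.0
    for _ in range(steps):
        probs = 1.0 / (1.0 + np.exp(-(features @ weights + bias)))
        error = probs - labels
        weights -= lr * (features.T @ error) / len(labels)
        bias -= lr * error.mean()
    return weights, bias

def detect(images, weights, bias):
    """Return 1 where the model reports a person, else 0."""
    return (images @ weights + bias > 0).astype(int)

# Training set: clean examples plus a small batch of poisoned ones, which show
# a person wearing the patch but are deliberately labeled "no person".
train_images = np.vstack([
    make_images(200, has_person=True),
    make_images(200, has_person=False),
    add_trigger(make_images(50, has_person=True)),
])
train_labels = np.concatenate([np.ones(200), np.zeros(200), np.zeros(50)])
weights, bias = train(train_images, train_labels)

# Evaluate on fresh images of people, with and without the patch.
test_people = make_images(100, has_person=True)
clean_detect_rate = detect(test_people, weights, bias).mean()
triggered_detect_rate = detect(add_trigger(test_people), weights, bias).mean()
print("detected without patch:", clean_detect_rate)
print("detected with patch:   ", triggered_detect_rate)
```

In this sketch the camera input still contains the person in both cases. Only the model's decision changes, which mirrors the point that the patch affects the model rather than the sensor.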

Why are you interested in this type of research?

Shriver: I'm very interested in the safety of AI systems and making sure that they are doing what we want them to do and not what we don't want them to do. A big piece of this is making sure that they don't have intentional or unintentional vulnerabilities that cause them to be dangerous. In some scenarios, that could mean problems that cause the user to lose a lot of money, or even injury or death. That’s why it’s important to be able to identify when they've been tampered with.

Moore: I'm very interested in the security aspect of AI models and safeguarding them, as well as developing methods to allow these AI systems not to seem like a black box. Giving people stronger interpretability and understanding of what's going on “under the hood” will provide more trust in these systems.

What’s next?

Moore: The method that we produced is sitting at the very front end of discovering what trojans look like. The next step is to make the models safer. We have some evidence that our method works, so we want to extend this to a paper, do more experiments and show the broader public what we are seeing with FEUD.

Shriver: Yes, this is still very experimental. The results are early. Eventually, we’d like to explore how to mitigate trojans once we’ve found them.

SEI #AI researchers created a way to make trojan images in #computervision models interpretable to humans. Feature Embeddings Using Diffusion (FEUD), which took second in an IEEE competition, combines reverse-engineering techniques and #generativeAI - https://t.co/RC8bh5bjDL pic.twitter.com/vwwQeQ1UB1

— Software Engineering Institute (@SEI_CMU) May 3, 2024