Movement Information Offers Critical Visual Cues

A new study finds that the brain uses motion cues to decipher how objects are seen

Most research studies use still pictures to explore how the brain constructs what is seen by the eyes, but we do not live in a static world. Motion cues offer a rich source of untapped information that can be beneficial in understanding how the brain categorizes objects.

A new study at Carnegie Mellon University, in collaboration with researchers at the National Institute of Mental Health, employed neuroimaging to understand how the brain registers animated and static images. The results were published on Jan. 25 in the Journal of Neuroscience.

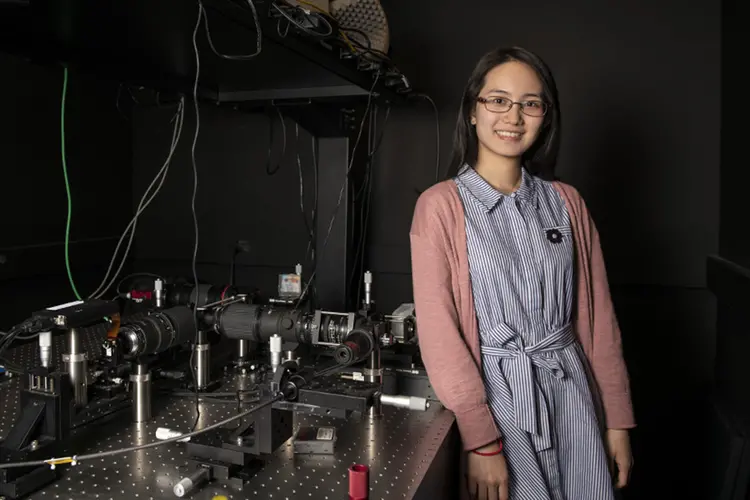

"When we talk about how images are processed in the brain, we traditionally talk about two pathways: one that examines what the object is and a second that focuses on how to interact with the object," said Sophia Robert, a Ph.D. candidate in the Department of Psychology at CMU and first author on the study. "The work that generated this theory was focused on pictures, frozen frames of what we see in our daily lives."

Motion is an important stimulus that provides information about an object. Previous work has touched on motion but mainly as it relates to human movement. Robert and her colleagues wanted to bring these two fields together to compare how the brain processes objects in static images and dynamic videos.

"There is a lot of information about an object just in the way that it moves," said Maryam Vaziri-Pashkam, a research fellow at the National Institute of Mental Health and senior author on the paper. "In this study, we wanted to see how good people were at deciphering objects by movement and what brain regions are used to extract this information."

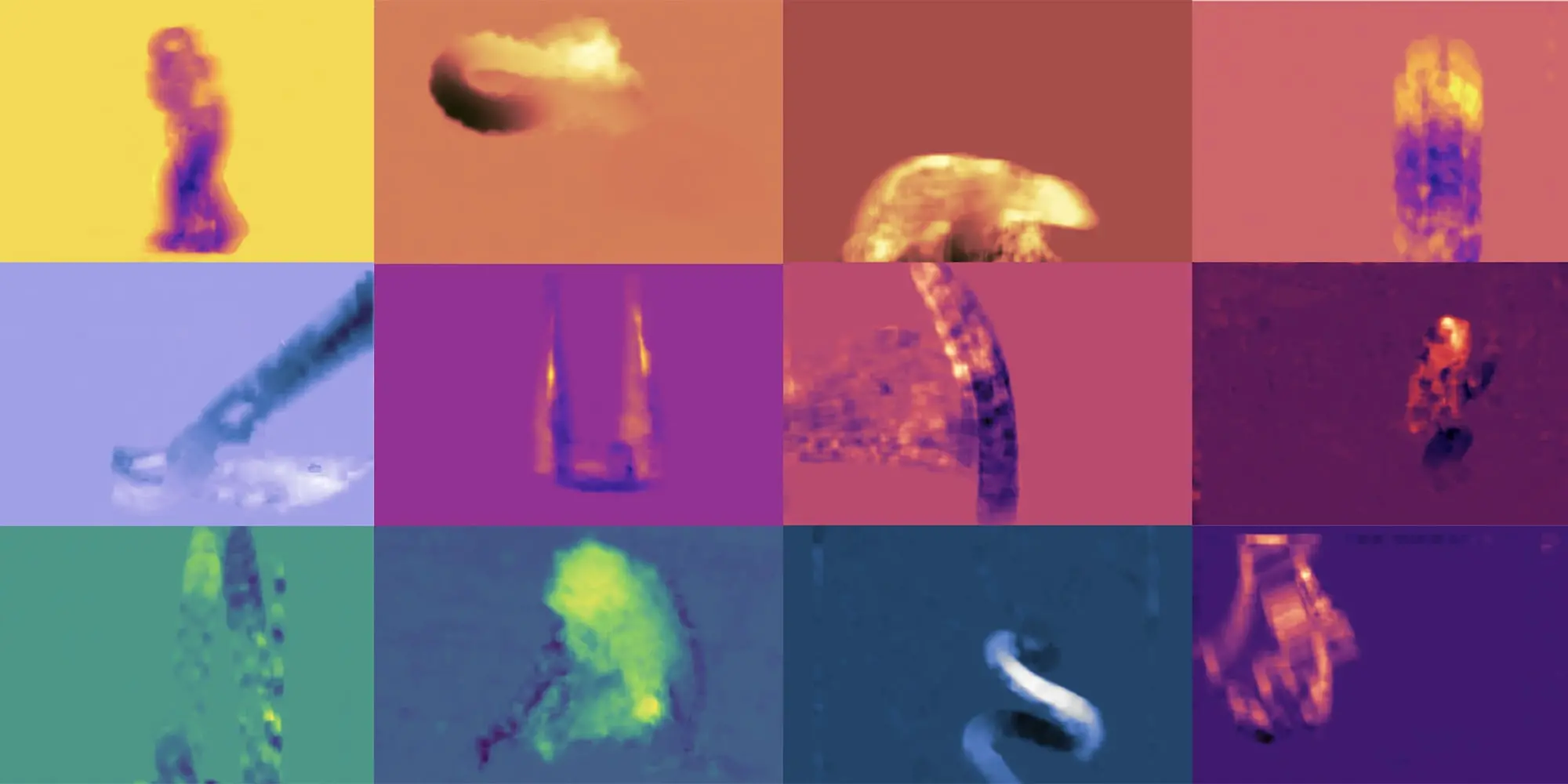

In the study, the team developed short animations that capture the outline of a moving object, depicted with dots. During the video, the object is set in motion among a cascade of like-sized dots. The videos in the study span six object categories: human, mammal, reptile, tool, ball and pendulum/swing.

The team asked 430 participants to identify the object in each video. Participants accurately identified the objects 76% of the time, even though the videos lacked shape, color and other visual cues.

"It is striking how good people are at identifying an object based on motion patterns," said Vaziri-Pashkam. "As soon as you see the videos, you see the object."

A video of a giraffe used in the study to determine if participants could identify the object by motion cues alone.

The corresponding static image is what is used for traditional visual research. Credit: Sophia Robert and Emalie McMahon

The team repeated this study with a subset of 15 participants who viewed the material while undergoing an fMRI scan. The participants were shown the six most highly recognized object videos (96% accuracy) and the corresponding still images of the same objects.

The researchers used the scans to identify the regions of the brain that are active when viewing static and dynamic objects. Their work focused on multiple regions in the brain responsible for sensory perception.

Their results support past findings on how the brain processes visual data and extend them by revealing that the areas that process static and animated images overlap and span multiple regions of the brain. In addition, the team identified regions not previously associated with object categorization that were active during the scans.

"It is not just about form or motion," said Robert. "The brain is built to grab as much information as it can from the environment to optimize speed and accuracy when categorizing an object."

This work introduces a tool for researchers to study how human brains process complex information every day, which could benefit many different disciplines. From a health care perspective, it could be used by clinicians who study populations with difficulties in social perception, such as people with autism. It could also assist researchers who develop algorithms that teach artificial intelligence how to interact with humans, by helping the AI see the world as a person does.

"This is just the beginning," said Vaziri-Pashkam. "Movement contains a treasure trove of information that can be used in many different domains."

Robert and Vaziri-Pashkam were joined by the late Leslie G. Ungerleider of the National Institute of Mental Health on the project, titled "Disentangling Object Category Representations Driven by Dynamic and Static Visual Input." The project received funding from the National Institute of Mental Health Intramural Research Program.
